
Researchers have discovered security flaws in open-source ML frameworks that could expose organizations to remote code execution (RCE) and data breaches.

The affected machine learning (ML) tools include MLflow, H2O, PyTorch, and MLeap, which underscores the need for robust cybersecurity practices in AI systems.

Key Takeaway on Security Flaws in Open-Source ML Frameworks:

- The vulnerabilities in open-source ML frameworks could allow attackers to execute malicious code and steal sensitive data, a significant risk for organizations that load third-party models.

Cybersecurity experts have identified critical security flaws in open-source ML frameworks that are widely used across industries. These flaws could let attackers take control of ML systems, steal sensitive information, and execute harmful code.

JFrog, a leading supply chain security company, discovered 22 vulnerabilities, including new client-side issues affecting tools like MLflow, H2O, PyTorch, and MLeap.

These findings underscore the importance of vigilance when working with AI and machine learning technologies.

What Are the Newly Discovered Flaws?

The flaws, primarily client-side issues, impact libraries handling supposedly safe model formats like Safetensors.

These vulnerabilities pose severe risks to organizations using these frameworks for AI and ML operations.

Highlighted Vulnerabilities

| Framework | Vulnerability | Potential Impact | Severity (CVSS) |
|---|---|---|---|
| MLflow | Insufficient sanitization in Jupyter Notebooks, leading to cross-site scripting and RCE | Remote Code Execution (RCE) | 7.2 |
| H2O | Unsafe deserialization when importing untrusted ML models | RCE | 7.5 |
| PyTorch | Path traversal in TorchScript, allowing denial-of-service (DoS) or file overwrite | System File Corruption | Not assigned |
| MLeap | Path traversal in zipped models, enabling arbitrary file overwrite and possible code execution | RCE | 7.5 |

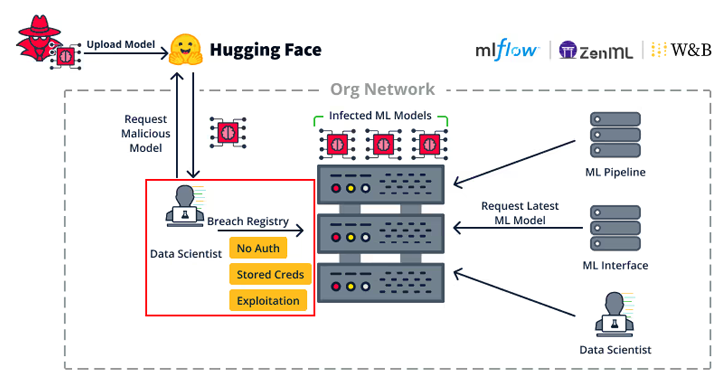

These vulnerabilities could allow attackers to exploit ML clients to access sensitive services like ML registries or pipelines, leading to widespread damage.
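To make the path traversal entries in the table more concrete, here is a minimal Python sketch of how a client might refuse a zipped model whose entries would be written outside the target directory. It is a generic illustration, not the fix applied in MLeap or PyTorch; the function name `safe_extract` and the two-pass design are assumptions made for this example. Python's own `zipfile` module already sanitizes member names on extraction, so the explicit check here serves to reject a suspicious archive outright rather than silently rewrite its paths.

```python
import zipfile
from pathlib import Path


def safe_extract(archive_path: str, dest_dir: str) -> list[str]:
    """Extract a zip archive, refusing entries that would land outside dest_dir.

    Hypothetical helper for illustration; requires Python 3.9+.
    """
    dest = Path(dest_dir).resolve()
    extracted = []
    with zipfile.ZipFile(archive_path) as zf:
        # First pass: validate every entry before writing anything to disk.
        for name in zf.namelist():
            target = (dest / name).resolve()
            if not target.is_relative_to(dest):
                raise ValueError(f"unsafe path in archive: {name!r}")
        # Second pass: every entry resolved inside dest, so extract them.
        for name in zf.namelist():
            zf.extract(name, dest)
            extracted.append(name)
    return extracted
```

An archive containing an entry such as `../evil.txt` or an absolute path is rejected with a `ValueError` before any file is written.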

How Do These Flaws Impact Organizations?

If an attacker compromises an ML client, they can gain access to critical systems. For example, ML clients often interact with model registries or MLOps pipelines, which store sensitive credentials and models.

An attacker could use these access points to inject malicious models, leading to severe breaches or disruptions.

In a real-life example, compromised ML pipelines in 2023 led to significant downtime for a healthcare company. This attack allowed hackers to manipulate patient data models, causing delays in diagnoses.

Why Safe Formats Aren’t Always Safe

JFrog’s researchers caution that using a “safe” format like Safetensors does not guarantee safety. These formats are designed so that loading a model does not run embedded code, but flaws in the libraries that handle them can still be exploited to execute arbitrary code.

This revelation highlights the importance of vetting all models before use, regardless of their source.
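For pickle-based model files, one way to approach this kind of vetting in Python is the "restricted unpickler" pattern described in the standard library's `pickle` documentation: override `find_class` so that only explicitly allowed globals can be resolved during loading. The sketch below is illustrative only. The allowlist contents and the helper name `restricted_loads` are assumptions for this example, a real model checkpoint would need a carefully reviewed allowlist, and this is not the remediation for any of the vulnerabilities listed above.

```python
import io
import pickle

# Hypothetical allowlist for illustration: only these (module, name) pairs
# may be resolved while unpickling. Everything else is refused.
ALLOWED_GLOBALS = {("collections", "OrderedDict")}


class RestrictedUnpickler(pickle.Unpickler):
    """Unpickler that refuses any global not on the allowlist."""

    def find_class(self, module, name):
        if (module, name) in ALLOWED_GLOBALS:
            return super().find_class(module, name)
        raise pickle.UnpicklingError(
            f"global '{module}.{name}' is not allowed"
        )


def restricted_loads(data: bytes):
    """Deserialize bytes with the restricted unpickler."""
    return RestrictedUnpickler(io.BytesIO(data)).load()
```

Plain containers such as dicts and lists load normally because they need no globals, while a payload that tries to reference a callable like `builtins.eval` fails with `pickle.UnpicklingError` before anything is called.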

Expert Recommendations to Stay Safe

Shachar Menashe, JFrog’s VP of Security Research, offers practical advice to protect against these threats:

- Verify Model Sources: Always validate the origin and integrity of ML models before using them.

- Avoid Untrusted Models: Never load untrusted models, even when they come from a “safe” ML repository.

- Secure Your ML Environment: Implement strong access controls and isolate ML systems from other parts of the network.
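As a rough illustration of the integrity part of the first recommendation, a model file can be checked against a SHA-256 digest published by a source you trust before it is loaded. The sketch below uses only Python's standard library; the function names and the idea of pinning an expected digest are assumptions made for this example rather than anything JFrog prescribes, and a checksum proves only that the file is unchanged, not that its contents are benign.

```python
import hashlib
import hmac


def sha256_of_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model_file(path: str, expected_sha256: str) -> None:
    """Raise ValueError if the file's digest differs from the pinned value.

    expected_sha256 is a hypothetical pinned digest obtained out of band,
    for example from a signed release note.
    """
    actual = sha256_of_file(path)
    if not hmac.compare_digest(actual, expected_sha256.lower()):
        raise ValueError(
            f"checksum mismatch for {path}: expected {expected_sha256}, got {actual}"
        )
```

Calling `verify_model_file` before handing the path to a model loader means a swapped or corrupted file stops the pipeline early instead of reaching the deserializer.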


Steps to Protect Your ML Systems

Here are practical tips to safeguard your organization from the risks posed by these vulnerabilities:

| Action | Why It’s Important |
|---|---|
| Update Frameworks Regularly | Ensures you have the latest security patches. |
| Use Antivirus Solutions | Detects and mitigates potential malware threats. |
| Implement Zero Trust Principles | Limits access to critical systems, reducing potential attack surfaces. |
| Educate Your Team | Awareness reduces human errors that could expose your systems. |


Rounding Up

The discovery of security flaws in open-source ML frameworks is a wake-up call for organizations relying on AI and machine learning. These vulnerabilities emphasize the importance of securing ML environments and carefully vetting tools before integrating them into workflows.

Because flaws like these can lead to RCE, proactive measures such as prompt patching, model vetting, and strict access controls are the most reliable way to reduce risk.

About JFrog

JFrog is a global leader in DevOps and software supply chain security. Its research team regularly uncovers vulnerabilities that impact industries worldwide. With a focus on protecting organizations from software threats, JFrog provides tools and insights to enhance security across systems.

FAQ

What are the newly discovered flaws in ML frameworks?

The flaws include path traversal, unsafe deserialization, and cross-site scripting. Depending on the framework, they can lead to remote code execution, arbitrary file overwrite, or denial of service.

Which frameworks are affected?

Popular tools like MLflow, H2O, PyTorch, and MLeap have been impacted by these vulnerabilities.

How can these flaws be exploited?

Attackers can use these flaws to access sensitive ML services, overwrite files, or execute malicious code.

What should I do if my organization uses these frameworks?

Update your systems, verify ML models before use, and implement strict security measures in your ML pipelines.

Why is Safetensors still vulnerable?

Safetensors is designed so that loading a model does not run embedded code, but the libraries that handle such formats can still contain flaws. JFrog warns that attackers can exploit those flaws to execute arbitrary code.